· Chuck Conway · Programming · 3 min read

C# 8 - Nullable Reference Types

Microsoft is adding a new feature to C# 8 called Nullable Reference Types. At first this is confusing, because all reference types are already nullable… so how is this different? Going forward, if the feature is enabled, reference types are non-nullable unless you explicitly annotate them as nullable.

Let me explain.

Nullable Reference Types

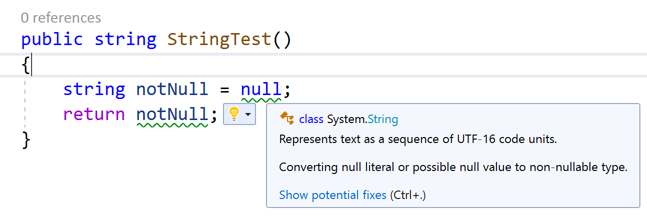

When Nullable Reference Types are enabled and the compiler believes a reference type has the potential of being null, it warns you. You’ll see warning messages from Visual Studio:

And build warnings:

To remove this warning, add a question mark to the end of the reference type. For example:

    public string? StringTest()
    {
        string? notNull = null;
        return notNull;
    }

Now the reference can hold null without a warning on assignment, just as it could before C# 8.
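As a minimal sketch of how the annotation interacts with the compiler's flow analysis, consider the following. The `Greeter` class and its methods are hypothetical names made up for illustration; only the `?` annotation and the warning number come from the language itself.

```csharp
#nullable enable

public static class Greeter
{
    // The return type is annotated with '?', so callers are told the result may be null.
    public static string? FindNickname(string name)
    {
        return name == "Charles" ? "Chuck" : null;
    }

    public static int NicknameLength(string name)
    {
        string? nickname = FindNickname(name);

        // Dereferencing 'nickname' at this point would produce warning CS8602,
        // because the compiler cannot prove it is non-null.
        if (nickname == null)
        {
            return 0;
        }

        // After the null check, the compiler treats 'nickname' as non-null.
        return nickname.Length;
    }
}
```

The annotation does not change runtime behavior. It only gives the compiler enough information to warn when a possibly null value is used without a check.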

This feature is enabled by adding <span class="inline-code">#nullable enable</span> to the top of any C# file or by adding <span class="inline-code">&lt;NullableReferenceTypes&gt;true&lt;/NullableReferenceTypes&gt;</span> to the .csproj file (the released version of C# 8 renamed this setting to <span class="inline-code">&lt;Nullable&gt;enable&lt;/Nullable&gt;</span>). Out of the box it’s not enabled, which is a good thing: if it were enabled, any existing code base would likely light up like a Christmas tree.
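Because the directive works per file (and even per region), an existing code base can opt in gradually. The sketch below assumes two hypothetical classes, `LegacyCode` and `NewCode`, living in the same file; the `#nullable disable`, `#nullable enable` and `#nullable restore` directives are part of C# 8.

```csharp
#nullable disable
public static class LegacyCode
{
    // Outside a nullable context, assigning null to 'string' produces no warning,
    // exactly as before C# 8.
    public static string Describe()
    {
        string value = null;
        return value ?? "legacy default";
    }
}

#nullable enable
public static class NewCode
{
    // Inside '#nullable enable', 'string' means non-null and 'string?' means maybe-null.
    public static string Describe(string? input)
    {
        return input ?? "new default";
    }
}
#nullable restore
```

`#nullable restore` hands control back to whatever the project file specifies, so the rest of the file follows the project-wide setting.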

The Null Debate

Why is Microsoft adding this feature now? Nulls have been part of the language since, well, the beginning. Honestly, I don’t know why. I’ve always used nulls; they’re a fact of life in C#. I didn’t realize not having nulls was an option… Maybe life will be better without them. We’ll find out.

Should you or should you not use nulls? I’ve summarized the ongoing debate as I understand it.

For

The argument for nulls is generally that an object can have an unknown state, and this unknown state is represented with null. You see this with a nullable bit column in SQL Server, which has three values: NULL (not set), 0 and 1. You also see this in UIs, where sometimes it’s important to know whether a user touched a field or not. Someone might counter with, “Instead of null, why not create an unknown state type or a ‘not set’ state?” How is this different from null? You’d still have to check for this additional state, and now you’re creating a separate unknown state for every type. Why not just use null and have a global unknown state?
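To make the three-state idea concrete, here is a small sketch that mirrors the SQL Server bit example. It uses a nullable value type (`bool?`), which predates C# 8, and the `SurveyAnswer` class and its property are hypothetical.

```csharp
public class SurveyAnswer
{
    // null = the user never touched the field; true/false = an explicit choice.
    public bool? WantsNewsletter { get; set; }

    public string Describe()
    {
        if (WantsNewsletter == null)
        {
            return "not set";
        }

        return WantsNewsletter.Value ? "yes" : "no";
    }
}
```

Here null carries real information: it distinguishes “the user said no” from “the user never answered”.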

Against

The argument against nulls is that null is effectively a different data type and must be checked for every time you use a reference type. The net result is code like this:

    var user = GetUser(username, password);
    if (user != null)
    {
        DoSomethingWithUser(user);
    }
    else
    {
        SetUserNotFoundErrorMessage();
    }

Suppose the GetUser method returned a user in all cases, including when the user is not found. If the code never returns null, guarding against it is wasted effort, and ideally removing the guard simplifies the code. However, at some point you’ll still need to check for an empty user and display an error message. Not using null doesn’t remove the need to handle the business case of a user not found.
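One way to picture a GetUser that never returns null is a sentinel “not found” instance. The sketch below is an assumption about how that might look, not the author’s code: `User.NotFound`, `IsNotFound`, `UserDirectory` and the single-argument `GetUser` are all hypothetical.

```csharp
#nullable enable

public class User
{
    // A single shared instance that stands in for "no such user".
    public static readonly User NotFound = new User("(unknown)");

    public string Name { get; }

    public User(string name)
    {
        Name = name;
    }

    public bool IsNotFound => ReferenceEquals(this, NotFound);
}

public static class UserDirectory
{
    // Never returns null; callers still have to handle the NotFound case.
    public static User GetUser(string username)
    {
        return username == "chuck" ? new User("Chuck") : User.NotFound;
    }

    public static string Greet(string username)
    {
        User user = GetUser(username);
        return user.IsNotFound ? "User not found." : "Hello, " + user.Name + "!";
    }
}
```

The null check is gone, but `IsNotFound` has taken its place, so the branch for the missing user has only moved.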

Is this Feature a Good Idea?

The purpose of this feature is NOT to eliminate the use of nulls, but instead to ask the question: “Is there a better way?” And sometimes the answer is “No”. If a little forethought lets us eliminate the constant checking for nulls, which in turn simplifies our code, I’m in. The good news is C# has made working with nulls trivial.
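As a rough illustration of how little ceremony null handling needs, the sketch below uses the null-conditional (`?.`), null-coalescing (`??`) and null-coalescing assignment (`??=`, new in C# 8) operators. The `Profile` and `NullOperators` types are hypothetical.

```csharp
#nullable enable

public class Profile
{
    public string? DisplayName { get; set; }
}

public static class NullOperators
{
    // '?.' short-circuits to null when 'profile' is null; '??' supplies a fallback.
    public static string NameOrDefault(Profile? profile)
    {
        return profile?.DisplayName ?? "anonymous";
    }

    // '?.' before Length yields an int?; '??' collapses it back to an int.
    public static int NameLength(Profile? profile)
    {
        return profile?.DisplayName?.Length ?? 0;
    }

    // '??=' assigns only when the left-hand side is currently null.
    public static string EnsureName(Profile profile)
    {
        profile.DisplayName ??= "anonymous";
        return profile.DisplayName;
    }
}
```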

I do fear some will take a dogmatic stance and insist on eliminating nulls to the detriment of a system. This is a fool’s errand, because nulls are integral to C#.

Are Nullable Reference Types a good idea? They are, if the end result is simpler and less error-prone code.