Hi, I'm Chuck.

I explore the technical depths and everyday impact of AI, and as a father, I'm particularly interested in how AI shapes the next generation.

Posts

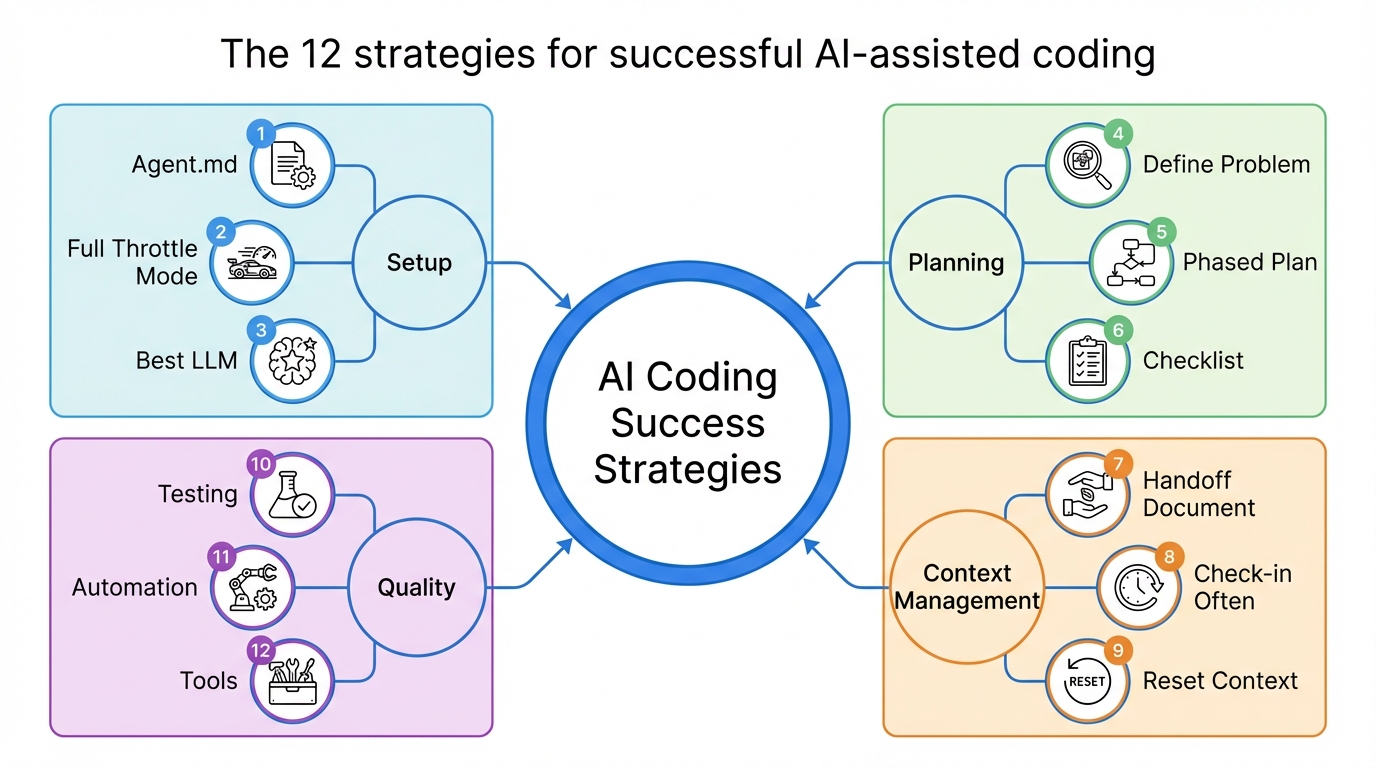

AI built a feature in 47 minutes that would have taken 8 hours of work a few years ago. But here's what nobody tells you: without the right strategies, that s…

MIT study reveals AI weakens critical thinking in young adults. Here's how I'm teaching my 3-year-old to use AI as a tool, not a crutch.

Leaving your job? Three simple steps can transform an uncertain transition into a strategic career move—and most professionals overlook the most crucial one.

While dependency injection is a must-have in Java and C#, its role in Python raises intriguing questions about when—and whether—to use this powerful design patt…

Discover five practical ways to leverage generative AI tools like ChatGPT to enhance your coding workflow and boost development productivity.

Chuck spills the tea on 30 years of career lessons, revealing surprising truths about toxic coworkers, the myth of loyalty, and why your family should always co…

Have you ever needed to modify a file locally without committing the changes to the remote repository?

Insight into pre-pandemic and post-pandemic job hunting.

Centralize your data integrity to ensure consistency across your organization.

When leading a team, it’s important to create an environment where everyone feels safe to express their ideas regardless of their experience level.